Can AI Be Trained on Data Generated by Other AI Models?

The concept of training artificial intelligence (AI) using data generated by other AI models may seem like an oddity, but this approach is becoming increasingly relevant. As obtaining authentic, high-quality data becomes more challenging and expensive, synthetic data generated by AI systems is gaining traction among major technology companies such as Anthropic, Meta, and OpenAI. This shift is not just a temporary trend, but a potential solution to a growing problem: how to train AI systems effectively when the data required to do so is difficult to come by.

Why Does AI Need Data?

At its core, AI is about recognizing patterns in vast amounts of data. Models are trained to make predictions or decisions based on these patterns. For instance, a machine learning model designed to recognize images might be trained using thousands of labeled images, each representing a particular class (e.g., photos of kitchens, dogs, cars, etc.). The model “learns” to recognize the key features that define these categories.

However, in order for AI to correctly identify objects, words, or even perform specific tasks, it needs access to data that is well-organized and properly annotated. Annotations are labels added to data, which provide context that enables the AI to understand what it’s looking at. Without this data, the AI might struggle to discern important features, or worse, it might misinterpret the data completely.

For example, if a photo-classification model is trained on images of kitchens but all the images are mis-labeled as “cow,” the AI will fail to recognize the photos as kitchens. This mislabeling highlights the importance of accurate and effective data labeling. And this process—data annotation—is one of the key components of training AI.

The Growing Market for Data Annotation

With the rising demand for labeled data, the market for annotation services has ballooned. According to research from Dimension Market Research, this market, currently worth $838 million, is projected to expand to $10.34 billion over the next decade. While this growth is a sign of the increasing reliance on AI, it also underscores the complexity of the data annotation process.

Data annotation typically involves humans labeling data by identifying patterns, categorizing images, or marking specific features in data. This process is time-consuming and expensive. The need for specialists, particularly when dealing with complex data, adds to the costs. For example, labeling images of medical scans requires specialized knowledge and expertise.

In many cases, these annotations are performed by workers in low-wage countries, where they may earn just a few dollars per hour, often without benefits or long-term job security. While this approach is cost-effective for companies, it highlights significant ethical and economic concerns surrounding the fairness and sustainability of the data annotation labor market.

The Challenge of Acquiring Real-World Data

Obtaining high-quality data has become increasingly difficult, primarily due to restrictions placed on publicly available datasets. As awareness of intellectual property rights grows, more companies are choosing to protect their data from being used without permission. Web scraping—the practice of extracting large amounts of data from websites—has become more difficult, with over 35% of the top 1,000 websites blocking OpenAI’s web scraper. This shift towards greater data privacy has resulted in fewer publicly accessible datasets for training AI models.

Furthermore, acquiring real-world data is expensive. Companies like Shutterstock charge tens of millions of dollars for access to their image libraries, and platforms like Reddit have profited by licensing their data to major tech companies such as OpenAI. These costs only add to the growing challenges for AI developers, who are often faced with increasingly limited access to the very data they need to create effective and reliable models.

Can Synthetic Data Solve These Problems?

Enter synthetic data. Synthetic data is generated by AI models rather than gathered from the real world. It can be created in vast quantities and tailored to meet specific needs, offering a promising solution to the growing challenges in acquiring real-world data.

The appeal of synthetic data lies in its ability to be generated quickly and cheaply. For instance, the company Writer created its AI model, Palmyra X 004, using primarily synthetic data. The development of this model cost just $700,000, a fraction of the estimated $4.6 million required to develop a similar-sized model using traditional data collection methods.

The use of synthetic data is not limited to text-based models. Companies such as Microsoft and Google have also incorporated synthetic data into their AI training processes. Microsoft’s Phi open models, for example, were trained using synthetic data, while Google’s Gemma models made use of AI-generated data. Even Nvidia, a leader in AI hardware, unveiled a model family designed specifically to generate synthetic training data.

The possibilities offered by synthetic data are vast. It can be used to train models for specific tasks, such as generating captions for videos, enhancing speech recognition systems, or creating detailed simulations for autonomous vehicles. OpenAI, for instance, has leveraged synthetic data to improve GPT-4’s Canvas feature, which powers ChatGPT’s ability to interpret and generate visual content.

Moreover, synthetic data offers an unprecedented level of customization. By adjusting the underlying parameters, developers can create datasets that address specific needs or challenges in their models. For example, synthetic data can be used to generate datasets that contain specific demographics or simulate rare events, all of which are difficult to capture in the real world.

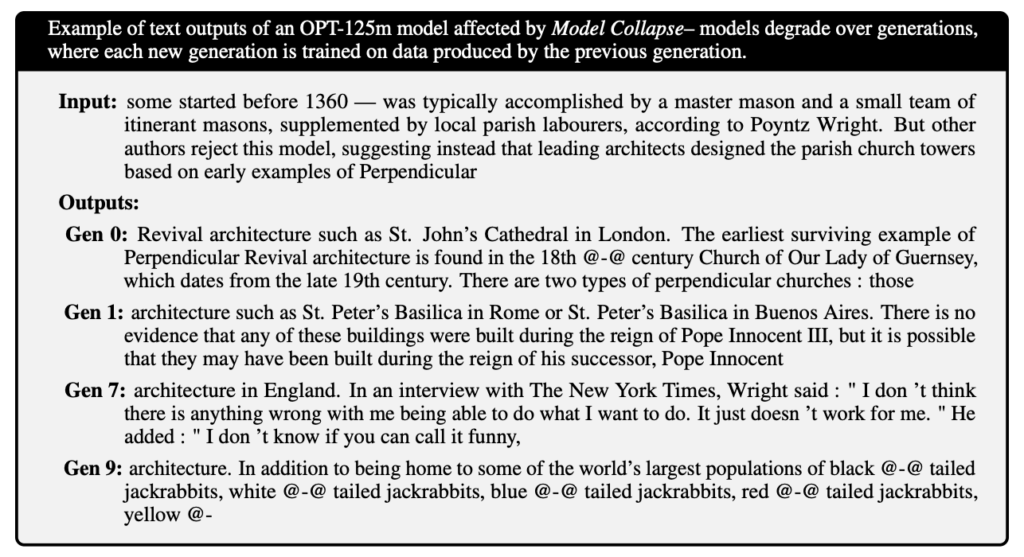

The Risks of Synthetic Data

While synthetic data offers many advantages, it also comes with its own set of risks and challenges. One major issue is that synthetic data is only as good as the original data it is based on. If the real-world data contains biases or inaccuracies, the synthetic data generated from it will likely inherit those flaws. This could lead to the propagation of existing biases in AI models trained on the synthetic data.

For example, if a dataset used to train an AI model contains mostly images of light-skinned individuals, any synthetic data generated from that dataset will likely over-represent this group. This could lead to AI models that are less effective or biased toward certain groups, particularly if the model is later deployed in real-world applications.

Moreover, synthetic data models are prone to the same “garbage in, garbage out” problem that affects all AI models. If the initial data used to train the model is flawed or unrepresentative, the synthetic data generated will also be flawed. This can result in less accurate, less diverse, and ultimately less reliable models.

Additionally, there is a risk of “hallucinations,” or the generation of errors or artifacts that can reduce the accuracy of models trained on synthetic data. These hallucinations can be difficult to identify and correct, especially in complex models. For instance, OpenAI’s o1 model could produce hallucinations that are challenging to detect and could compromise the performance of downstream AI applications.

Conclusion

The ability to train AI on data generated by other AI models is an exciting development with the potential to revolutionize the way AI systems are created. By harnessing synthetic data, companies can bypass some of the challenges associated with acquiring real-world data, such as high costs, limited availability, and privacy concerns. However, the risks associated with synthetic data—such as the perpetuation of biases and the potential for hallucinations—must be carefully managed.

As AI continues to evolve, the key to creating fair, accurate, and reliable models will lie in finding the right balance between real and synthetic data. The ongoing research and advancements in synthetic data generation will likely play a crucial role in shaping the future of AI development. As we move forward, it will be essential for developers to be mindful of the limitations and ethical considerations of using AI-generated data in order to build systems that are both effective and equitable.

Source: https://techcrunch.com/2024/12/24/the-promise-and-perils-of-synthetic-data/

Source: https://thesperks.com/chinas-revolutionary-open-source-ai-model-outperforms-industry-leaders/